AI Reasoning Models: How to Get Smarter Answers from Your AI

Learn when to use reasoning models for decision-making, problem-solving, and everyday AI tasks.

Sometimes, AI just gives you an answer. Other times, it actually reasons through a problem. What’s going on there—and does it matter for you?

If you follow AI news, you’ve probably heard a lot about reasoning models. You might have even seen discussions about their ability to solve tricky logic puzzles or advanced math problems. But what does that mean for you? Are they just a tool for researchers and engineers, or can they actually help with everyday tasks?

As someone who uses AI in everyday life and work, I’ve been trying to figure out where reasoning models actually matter for people who aren’t crunching numbers at NASA. Are they a big deal for planning your budget? Scheduling your week? Figuring out how many tiles you need for a home project?

This article breaks down what reasoning models do, how they differ from standard AI, and—most importantly—whether they’re useful in real-world, day-to-day situations.

What Are Reasoning Models?

At their core, reasoning models are large language models (LLMs) trained to work through a problem in intermediate steps before committing to a final answer. That makes them better than traditional AI models at structured problem-solving, logical reasoning, and multi-step decision-making.

Another way to think about it:

Traditional LLMs are like students who memorized the textbook. They can recall a lot of information but might struggle to explain how they got an answer.

Reasoning models are like students who don’t just memorize; they work through problems step by step, showing their process along the way.

Examples of Reasoning Models

Several AI models today are designed with enhanced reasoning capabilities:

o1 and o3 (OpenAI) – OpenAI’s reasoning-focused models, built to work through a problem before answering; useful for structured problem-solving and analysis.

DeepSeek R1 – A model focused on improving logical reasoning and efficiency, often performing well on mathematical and scientific tasks.

Claude 3.7 Sonnet (Anthropic) – A hybrid model that can answer quickly or switch to an "extended thinking" mode to reason through complex problems step by step.

Gemini 2.5 (Google DeepMind) – Designed to integrate multimodal reasoning (text, images, and more) to tackle broader, logic-heavy challenges.

Unlike standard LLMs, reasoning models excel at breaking problems down into steps, reducing errors when solving logic-based tasks. But does that mean you should always use one? Not necessarily.

When Do They Matter?

Most of the time, if you ask an AI a question, it just spits out an answer. That works fine for things like summarizing articles, generating ideas, or answering general knowledge questions. But what if you need AI to think through a problem instead of just reacting?

That’s where reasoning models come in. They’re designed for multi-step decision-making, structured problem-solving, and tasks that require logical thinking rather than just pattern-matching.

For example, consider situations like financial planning, travel logistics, or home improvement. These are areas where small mistakes can add up, and a model that “thinks” through the process can be a game changer.

Let’s take financial planning as an example. Say you ask AI about loan interest:

Prompt:

"How much interest will I pay on a $10,000 loan over five years at 6% interest?"

How a Traditional LLM Might Respond:

"At 6% interest over five years, your total interest will be around $3,000."

How a Reasoning Model Responds:

"To calculate your total interest, we assume a fixed 6% annual interest rate. Using the simple interest formula (Principal × Rate × Time), we get:

$10,000 × 0.06 × 5 = $3,000 in total interest.

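If this is a standard installment loan with monthly payments, you pay down the principal as you go, so total interest will be lower. If interest compounds instead, the total will depend on compounding frequency."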

Both models give an answer, but the reasoning model shows the steps, making it easier to verify or adapt to different scenarios.

In a previous article, I suggested having AI write a quick Python script for multi-step calculations since traditional LLMs often get math wrong. A reasoning model is another alternative—it doesn’t replace the precision of a well-written script, but it’s much better at showing its work than a standard LLM.
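As an illustration of that kind of script, here is a minimal Python sketch for the loan question above. It compares the simple-interest formula with a standard amortizing loan, assuming a fixed rate and equal monthly payments; the original prompt doesn’t say how the loan is repaid, so treat that as an assumption.

```python
def simple_interest(principal: float, annual_rate: float, years: float) -> float:
    """Interest = Principal x Rate x Time (the formula used in the example)."""
    return principal * annual_rate * years


def amortized_interest(principal: float, annual_rate: float, years: int) -> tuple[float, float]:
    """Return (monthly_payment, total_interest) for a fixed-rate installment loan.

    Assumes equal monthly payments and no fees; real loans may differ.
    """
    n = years * 12                 # number of monthly payments
    r = annual_rate / 12           # monthly interest rate
    if r == 0:
        payment = principal / n    # no interest: just split the principal
    else:
        # Standard amortization formula: P * r / (1 - (1 + r)^-n)
        payment = principal * r / (1 - (1 + r) ** -n)
    total_interest = payment * n - principal
    return payment, total_interest


if __name__ == "__main__":
    print(f"Simple interest: ${simple_interest(10_000, 0.06, 5):,.2f}")
    payment, interest = amortized_interest(10_000, 0.06, 5)
    print(f"Monthly payment: ${payment:,.2f}")
    print(f"Amortized total interest: ${interest:,.2f}")
```

With the article’s numbers, simple interest comes to $3,000.00, but an amortized loan works out to about $193.33 a month and roughly $1,599.68 in total interest, because each payment chips away at the principal. That gap is exactly the kind of assumption worth checking in any AI answer.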

Where Else Are Reasoning Models Useful?

Here are a few everyday situations where reasoning models tend to outperform standard LLMs:

Complex Scheduling – If you’re juggling multiple people’s availability for a trip, a traditional LLM might give general advice, but a reasoning model can analyze multiple schedules and suggest an optimal plan.

DIY & Home Improvement – Need to figure out how many tiles you need for a room? A reasoning model can do the math step-by-step, reducing the risk of miscalculations.

Trip Planning & Logistics – Traditional LLMs can suggest places to visit, but a reasoning model can plan an efficient route, factoring in driving time, gas stops, and hotel stays.
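To make the DIY case concrete, here is the step-by-step tile math as a small Python sketch. It assumes a rectangular floor, square tiles, and a 10% allowance for cuts and breakage (a common rule of thumb, not a fixed standard); the function name and defaults are illustrative.

```python
import math


def tiles_needed(room_length_ft: float, room_width_ft: float,
                 tile_size_in: float, waste_pct: float = 10) -> int:
    """Estimate square tiles for a rectangular floor, including a waste allowance."""
    # Work in square inches so room and tile use the same unit.
    room_area_sq_in = (room_length_ft * 12) * (room_width_ft * 12)
    tile_area_sq_in = tile_size_in ** 2
    base_count = room_area_sq_in / tile_area_sq_in
    # Add extra for cuts and breakage, then round up: you can't buy part of a tile.
    return math.ceil(base_count * (100 + waste_pct) / 100)


if __name__ == "__main__":
    # A 12 x 10 ft room with 12-inch tiles.
    print(tiles_needed(12, 10, 12))
```

For that 12 × 10 ft room with 12-inch tiles, the sketch returns 132: 120 tiles to cover the floor plus 10% extra. A reasoning model should walk through the same three steps (area, tile count, waste), which makes its answer easy to check.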

This doesn’t mean reasoning models are necessary for everything—you wouldn’t need one for casual writing or brainstorming—but for anything that involves structured thinking, they can make a real difference.

Should You Care?

If you use AI casually—to brainstorm ideas, summarize articles, or chat about random topics—reasoning models might not seem like a big deal. But if you’re using AI for anything that involves structured problem-solving, logic, or calculations, understanding how to prompt these models effectively can make all the difference.

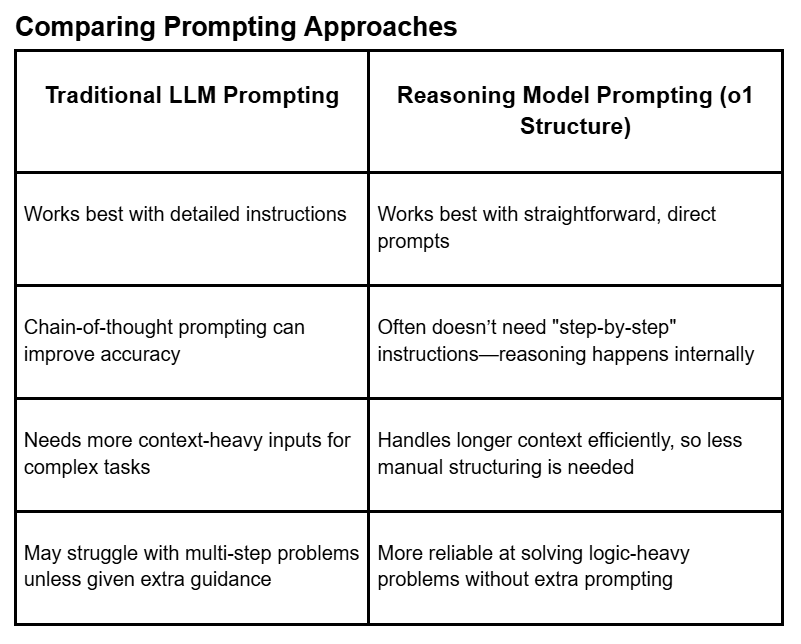

Prompting Differences: How to Get the Best Responses from Reasoning Models

If you’ve played around with AI prompts before, you know that how you ask a question can drastically change the response. With traditional LLMs, getting the best answer often means guiding the AI with structured instructions, like:

• The Template Pattern (forcing AI to follow a structured format)

• The Chain-of-Thought Pattern (telling AI to explain step by step)

• The Flipped Interaction Pattern (making AI ask clarifying questions first)

But reasoning models, like OpenAI’s o1 and o3, DeepSeek R1, Claude 3.7 Sonnet, and Gemini 2.5, work differently. They don’t always need as much prompting because structured reasoning is built in. This means you can often get a high-quality answer with fewer instructions.

Here’s how traditional LLMs and reasoning models compare when it comes to prompting:

Example 1: Investment Advice

Let’s say you’re trying to figure out the best way to invest $5,000 over 10 years.

Traditional LLM Prompt:

"I’m 35 years old with $5,000 to invest. Give me an investment strategy for 10 years. Show your reasoning step by step and explain any assumptions."

Reasoning Model Prompt:

"I’m 35 and want to invest $5,000 for 10 years. What’s my best strategy?"

Expected Difference in Responses:

A traditional LLM might list investment options and provide general financial advice, but it may not tie those options to your age, timeframe, and risk tolerance.

A reasoning model is more likely to analyze risk factors, project returns, and adjust recommendations based on timeframe and risk tolerance—even without being explicitly told to "show its reasoning."
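"Project returns" usually means a compound-growth calculation like the one below. This is a minimal sketch: the 3%, 5%, and 7% annual returns are hypothetical scenarios chosen for illustration, not forecasts or advice, and the formula ignores fees, taxes, inflation, and year-to-year volatility.

```python
def future_value(principal: float, annual_return: float, years: int) -> float:
    """Value of a one-time investment compounded once per year."""
    return principal * (1 + annual_return) ** years


if __name__ == "__main__":
    # Hypothetical conservative / moderate / aggressive return scenarios.
    for rate in (0.03, 0.05, 0.07):
        print(f"{rate:.0%} per year: ${future_value(5_000, rate, 10):,.2f}")
```

At an assumed 7% a year, $5,000 grows to about $9,835.76 after 10 years; at 5%, about $8,144.47. Seeing the scenarios side by side is what lets you judge whether a model’s recommendation actually matches your risk tolerance.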

Example 2: Trip Planning

Now, let’s say you’re planning a week-long vacation across multiple cities and want the most efficient travel itinerary.

Traditional LLM Prompt:

"Plan a 7-day road trip from New York to Miami, visiting at least three major cities along the way. Optimize for scenic routes and include recommended stops. Provide your reasoning step by step."

Reasoning Model Prompt:

"I’m taking a 7-day road trip from New York to Miami and want the best itinerary, including scenic routes and interesting stops."

Expected Difference in Responses:

A traditional LLM might suggest a list of cities and attractions, but without truly optimizing for efficiency. It might even provide a generic road trip plan that doesn’t account for travel time.

A reasoning model is more likely to calculate optimal driving times, balance sightseeing with rest periods, and sequence stops logically—without needing extra prompting.
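One piece of that planning, pacing an ordered route so no single day has too much driving, can be sketched in a few lines of Python. This uses a simple greedy approach (not necessarily what any model does internally), and the leg names and hours are hypothetical placeholders; real drive times would come from a map service.

```python
Leg = tuple[str, float]  # (leg name, estimated driving hours)


def split_into_days(legs: list[Leg], max_hours_per_day: float) -> list[list[Leg]]:
    """Greedily group consecutive legs into days without exceeding the daily cap."""
    days: list[list[Leg]] = []
    current_day: list[Leg] = []
    hours_today = 0.0
    for name, hours in legs:
        # Start a new day if this leg would push today past the cap.
        # A single leg longer than the cap still gets a day of its own.
        if current_day and hours_today + hours > max_hours_per_day:
            days.append(current_day)
            current_day, hours_today = [], 0.0
        current_day.append((name, hours))
        hours_today += hours
    if current_day:
        days.append(current_day)
    return days


if __name__ == "__main__":
    # Hypothetical placeholder legs, not real drive times.
    legs = [("Leg 1", 2.0), ("Leg 2", 3.0), ("Leg 3", 4.0), ("Leg 4", 1.5), ("Leg 5", 5.0)]
    for i, day in enumerate(split_into_days(legs, max_hours_per_day=6.0), start=1):
        print(f"Day {i}: {[name for name, _ in day]}")
```

This only paces a route whose stops are already chosen and ordered; picking and sequencing the stops is the harder part of the problem, and it’s where a reasoning model’s step-by-step approach is supposed to help.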

Practicality Considerations: Are Reasoning Models Always Better?

So, should you use a reasoning model all the time? Not necessarily. They have their downsides too:

Slower Responses – Since they actually “think” through problems instead of blurting out answers, reasoning models take longer to respond. That’s great for accuracy but not ideal if you just need a quick answer.

More Processing Power – These models use more computing resources per answer, so they often cost more, come with tighter usage limits on free tiers, and need capable hardware if you run an open model like DeepSeek R1 on your own machine.

Not Always Worth It – If you just need AI to whip up an email, generate a quick list of ideas, or summarize an article, a reasoning model is overkill. A regular AI model will likely be just as good—and a lot faster.

Think of it like using a scientific calculator for basic addition. It’ll get the job done, but do you really need all that extra processing power just to solve 2 + 2?

Final Thoughts: When It’s Worth Using a Reasoning Model

You might not need a reasoning model every day, but when you do, it can make a real difference. Whether you're planning a trip, crunching numbers, or tackling a logic-heavy problem, the right AI tool can mean the difference between a helpful answer and a half-baked guess.

Not sure if a reasoning model is worth it? Run a little experiment. Ask the same question to a regular AI model and a reasoning model. See which one actually thinks through the problem instead of just throwing out an answer. You might be surprised at the difference.

What Do You Think?

Have you tried a reasoning model yet? Did it improve your results? Drop a comment below and share your experience!